Overview

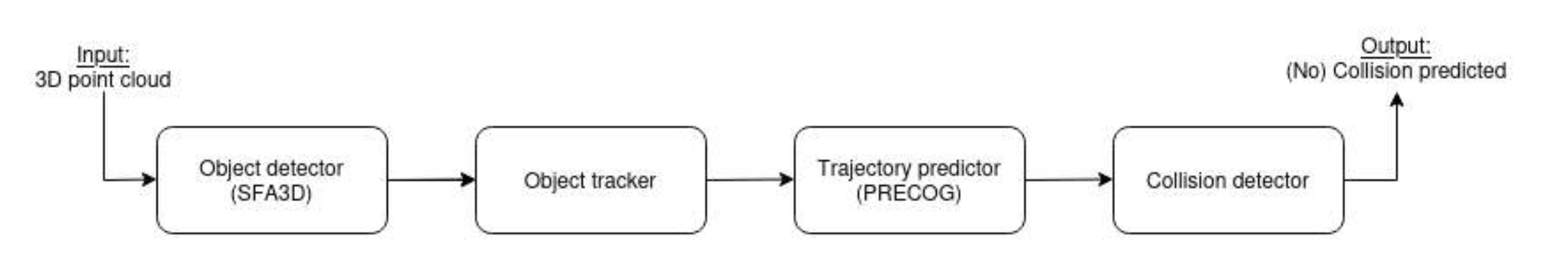

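The CycleSafely pipeline processes multi-modal sensor data in real time to detect vehicles, predict their trajectories, and alert cyclists to potential dangers. Its four core processing steps are Object Detection, Object Tracking, Trajectory Prediction, and Collision Risk Assessment. They are preceded by data acquisition and sensor fusion and followed by output, alerts, and recording, which gives the five stages described below.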

The system supports two deployment modes: a full desktop/embedded version with comprehensive sensors and processing, and an optimized mobile version for smartphones that prioritizes real-time performance on resource-constrained devices.

Stage 1: Data Acquisition and Sensor Fusion

Sensor Input

The system captures synchronized multi-modal sensor data from cameras, LIDAR, GPS, and IMU sensors to build a comprehensive understanding of the cyclist's environment and surrounding traffic.

- Desktop/Embedded: Velodyne HDL-64E or Livox Mid-360 LIDAR for high-resolution 3D point clouds, RGB-D camera for high-quality visual data, and Insta360 camera for panoramic capture.

- Mobile: Smartphone camera (mounted on handlebar) with adaptive resolution based on processing load, optional external sensors via Bluetooth/USB.

Registration and Localization

GPS and IMU data are fused with visual odometry to determine the cyclist's position, orientation, and velocity in real time, enabling accurate coordinate transformations and map alignment.

- Desktop/Embedded: ORB-SLAM3 for robust visual odometry and registration, point cloud registration and alignment with OpenStreetMap data.

- Mobile: Simplified visual odometry using feature tracking, compass-based orientation estimation, and GPS+IMU fusion with Kalman filtering.
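The GPS+IMU fusion mentioned for the mobile variant can be illustrated with a minimal one-dimensional Kalman filter. This is a sketch under stated assumptions, not the project's implementation: the class name `GpsImuFilter1D`, the noise values, and the reduction to a single axis are all hypothetical. IMU acceleration drives the prediction step, and each GPS fix corrects the accumulated drift.

```python
from dataclasses import dataclass, field


@dataclass
class GpsImuFilter1D:
    """Tracks position (m) and velocity (m/s) along one axis (hypothetical sketch)."""
    p: float = 0.0
    v: float = 0.0
    # State covariance [[p00, p01], [p10, p11]]
    P: list = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])
    accel_noise: float = 0.5  # assumed IMU acceleration std dev (m/s^2)
    gps_noise: float = 3.0    # assumed GPS position std dev (m)

    def predict(self, accel, dt):
        """Propagate the state with an IMU acceleration sample over dt seconds."""
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        (p00, p01), (p10, p11) = self.P
        # P <- F P F^T with F = [[1, dt], [0, 1]]
        n00 = p00 + dt * (p01 + p10) + dt * dt * p11
        n01 = p01 + dt * p11
        n10 = p10 + dt * p11
        n11 = p11
        # Process noise Q = q * G G^T with G = [dt^2 / 2, dt]
        q = self.accel_noise ** 2
        g0, g1 = 0.5 * dt * dt, dt
        self.P = [[n00 + q * g0 * g0, n01 + q * g0 * g1],
                  [n10 + q * g0 * g1, n11 + q * g1 * g1]]

    def update(self, gps_pos):
        """Correct the state with a GPS position fix (measurement H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.gps_noise ** 2      # innovation variance
        k0, k1 = p00 / s, p10 / s          # Kalman gain
        residual = gps_pos - self.p
        self.p += k0 * residual
        self.v += k1 * residual
        # P <- (I - K H) P
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]
```

In use, `predict` would run at the IMU rate and `update` whenever a GPS fix arrives. A real system would extend the state to 2D or 3D position plus heading.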

Stage 2: Object Detection (SFA3D/YOLO3D)

Vehicle Detection

Deep learning models identify vehicles in the surrounding environment by analyzing sensor data to create 3D bounding boxes, achieving real-time detection rates of over 10 frames per second on both platforms.

Real-time object detection showing bounding boxes around detected vehicles

- Desktop/Embedded: SFA3D (Super Fast and Accurate 3D Object Detection) using PyTorch processes LIDAR point clouds converted to Bird's-Eye View (BEV) representations, combined with YOLOv4/YOLO3D for RGB image detection and vehicle surface reconstruction.

- Mobile: Quantized YOLO3D model (INT8) with GPU/NPU acceleration (Metal/CoreML on iOS, TensorFlow Lite on Android), monocular depth estimation using LiteDepth, and frame skipping during low-risk scenarios to conserve battery.
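To make the Bird's-Eye View conversion concrete, the sketch below rasterizes a LIDAR point cloud into a top-down height map. It is a simplified illustration and not SFA3D's actual preprocessing: the function name `points_to_bev`, the grid extents, the cell size, and the height-only encoding are assumptions.

```python
def points_to_bev(points, x_range=(0.0, 50.0), y_range=(-25.0, 25.0), cell=0.5):
    """Rasterize (x, y, z) points into a grid holding the max height per cell.

    Cells that contain no points stay None. All ranges are in metres.
    """
    cols = int((x_range[1] - x_range[0]) / cell)
    rows = int((y_range[1] - y_range[0]) / cell)
    grid = [[None] * cols for _ in range(rows)]
    for x, y, z in points:
        # Drop points outside the region of interest.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        c = min(int((x - x_range[0]) / cell), cols - 1)
        r = min(int((y - y_range[0]) / cell), rows - 1)
        if grid[r][c] is None or z > grid[r][c]:
            grid[r][c] = z
    return grid
```

A 2D detector can then treat the grid like an image, which is what makes BEV-based detection fast compared with operating on raw 3D points.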

Stage 3: Object Tracking (3D Multi-Object Tracker)

Multi-Vehicle Tracking

Detected vehicles are tracked across consecutive frames using Kalman filtering. The tracker maintains consistent identities, handles occlusions, and computes distances, while keeping identity switches rare enough for reliable monitoring.
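The text names Kalman filtering but does not describe how detections are matched to existing tracks. As one possible illustration, the sketch below uses greedy nearest-neighbour association with a distance gate. The class name `NearestNeighbourTracker` and the 2 m gate are assumptions, and the Kalman prediction step is omitted for brevity.

```python
import math
from itertools import count


class NearestNeighbourTracker:
    """Keeps stable IDs by matching each detection to the closest known track."""

    def __init__(self, gate=2.0):
        self.gate = gate        # max match distance in metres (assumed value)
        self.tracks = {}        # track id -> last known (x, y)
        self._ids = count(1)

    def update(self, detections):
        """Match detections to tracks; return {track_id: (x, y)} for this frame."""
        # All candidate pairs, closest first.
        pairs = sorted(
            (math.dist(pos, det), tid, i)
            for tid, pos in self.tracks.items()
            for i, det in enumerate(detections)
        )
        assigned, used_tracks, used_dets = {}, set(), set()
        for dist, tid, i in pairs:
            if dist > self.gate:
                break           # remaining pairs are even farther apart
            if tid in used_tracks or i in used_dets:
                continue
            assigned[tid] = detections[i]
            used_tracks.add(tid)
            used_dets.add(i)
        # Unmatched detections start new tracks.
        for i, det in enumerate(detections):
            if i not in used_dets:
                assigned[next(self._ids)] = det
        # Unmatched tracks are kept, so a briefly occluded vehicle keeps its ID.
        self.tracks.update(assigned)
        return assigned
```

This sketch never deletes stale tracks. A practical tracker would drop a track after it has gone unmatched for some number of frames.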

Trajectory Analysis

The system analyzes vehicle motion by computing speed and acceleration from GPS and IMU data, calculating relative velocities, fitting smooth path curves, and classifying driver behaviors such as passing, turning, or crossing maneuvers.
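The relative-velocity computation can be sketched with finite differences over timestamped positions. The example assumes both tracks are already expressed in a shared local metric frame as `(t, x, y)` samples. The function names are hypothetical, and behavior classification is left out because the text does not specify its rules.

```python
import math


def velocity(samples):
    """Finite-difference velocity (vx, vy) in m/s from the last two (t, x, y) samples."""
    (t0, x0, y0), (t1, x1, y1) = samples[-2], samples[-1]
    dt = t1 - t0
    return ((x1 - x0) / dt, (y1 - y0) / dt)


def closing_speed(cyclist, vehicle):
    """Rate at which the gap shrinks (m/s); positive means the vehicle is approaching."""
    vc, vv = velocity(cyclist), velocity(vehicle)
    rel_vel = (vv[0] - vc[0], vv[1] - vc[1])
    rel_pos = (vehicle[-1][1] - cyclist[-1][1], vehicle[-1][2] - cyclist[-1][2])
    gap = math.hypot(*rel_pos)
    if gap == 0.0:
        return 0.0
    # Project the relative velocity onto the line between the two road users.
    return -(rel_pos[0] * rel_vel[0] + rel_pos[1] * rel_vel[1]) / gap
```

For instance, a vehicle 13 m behind the cyclist and 7 m/s faster along the same direction has a closing speed of just under 7 m/s when it is slightly offset laterally.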

Trajectory extraction showing vehicle paths over time

Stage 4: Trajectory Prediction and Risk Assessment

PRECOG Trajectory Prediction

The PRECOG (PREdiction Conditioned On Goals) framework forecasts future vehicle trajectories over 1-3 seconds by generating multi-modal predictions that consider vehicle dynamics, driver intentions, environmental constraints, and interactions between multiple agents.

PRECOG trajectory prediction showing future vehicle paths

- Desktop/Embedded: Full PRECOG framework using TensorFlow with multi-agent trajectory prediction, goal-conditioned forecasting based on likely destinations, and consideration of road boundaries and traffic rules.

- Mobile: Compressed PRECOG model or heuristic-based prediction with simplified risk assessment (distance and speed based) focusing on immediate threats with a shorter prediction horizon.
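The heuristic-based prediction on mobile is not specified further. One common baseline, shown here purely as an assumption, is constant-velocity extrapolation over a short horizon. The function name and default values are hypothetical, and this is not PRECOG.

```python
def predict_constant_velocity(pos, vel, horizon=1.0, step=0.25):
    """Extrapolate a position under constant velocity.

    pos: current (x, y) in metres; vel: (vx, vy) in m/s.
    Returns a list of (t, x, y) samples for t = step, 2*step, ..., horizon.
    """
    n = int(round(horizon / step))
    return [
        (k * step, pos[0] + vel[0] * k * step, pos[1] + vel[1] * k * step)
        for k in range(1, n + 1)
    ]
```

A baseline like this cannot anticipate turns or interactions between agents, which is the gap the multi-agent, goal-conditioned model on the desktop variant is meant to close.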

Collision Risk Assessment

Predicted trajectories are analyzed against the cyclist's path to calculate time-to-collision (TTC), estimate collision probability, detect safety distance violations (1.5 m threshold for vehicles exceeding 30 km/h), identify traffic law violations, and classify collision severity levels.
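A simplified version of the distance check can be written as a closest-point-of-approach computation. The sketch assumes constant relative velocity and point-like road users. The 1.5 m and 30 km/h thresholds come from the text, while the function names and the reduction to two dimensions are assumptions.

```python
import math

SAFE_DISTANCE_M = 1.5        # threshold stated in the text
SPEED_THRESHOLD_KMH = 30.0   # threshold stated in the text


def closest_approach(rel_pos, rel_vel):
    """Return (time_s, distance_m) of closest approach.

    rel_pos and rel_vel are the vehicle's position and velocity relative to
    the cyclist; constant relative velocity is assumed.
    """
    speed_sq = rel_vel[0] ** 2 + rel_vel[1] ** 2
    if speed_sq == 0.0:
        return 0.0, math.hypot(*rel_pos)
    # Minimize |rel_pos + rel_vel * t|, clamped so we never look into the past.
    t = max(0.0, -(rel_pos[0] * rel_vel[0] + rel_pos[1] * rel_vel[1]) / speed_sq)
    dx = rel_pos[0] + rel_vel[0] * t
    dy = rel_pos[1] + rel_vel[1] * t
    return t, math.hypot(dx, dy)


def is_close_pass(rel_pos, rel_vel, vehicle_speed_ms):
    """Flag a predicted pass inside the safety distance by a fast vehicle."""
    _, distance = closest_approach(rel_pos, rel_vel)
    return distance < SAFE_DISTANCE_M and vehicle_speed_ms * 3.6 > SPEED_THRESHOLD_KMH
```

The time returned by `closest_approach` serves as a rough stand-in for TTC here. A fuller assessment would account for vehicle dimensions and the uncertainty of the predicted trajectories.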

Stage 5: Output, Alerts, and Recording

User Interface and Alerts

The system provides multi-modal warnings through visual displays, audible alerts, and haptic feedback to ensure cyclists are immediately aware of imminent dangers while maintaining focus on the road.

- Desktop/Embedded: Real-time display on tablet showing distance warnings and visualization of predicted vehicle paths.

- Mobile: Simple visual indicators on phone screen with glanceable display design optimized for cycling safety, plus vibration alerts for close passes.

Data Recording and Analysis

A continuous video buffer automatically retains the last N seconds before an incident. The system also stores localized collision statistics and uploads anonymized data (with faces and license plates blurred) for post-incident reconstruction and safety research.

- Desktop/Embedded: High-quality continuous video buffer with immediate upload capability for comprehensive analysis.

- Mobile: Rolling buffer recording limited by storage constraints, with background upload when connected to WiFi to conserve mobile data.
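The "last N seconds" behavior maps naturally onto a fixed-size ring buffer. The sketch below uses Python's `collections.deque` with `maxlen`; the class name `RollingBuffer` and its methods are hypothetical, and frames are treated as opaque objects.

```python
from collections import deque


class RollingBuffer:
    """Keeps only the most recent `seconds` worth of frames."""

    def __init__(self, seconds, fps):
        # A deque with maxlen discards the oldest item when a new one is appended.
        self.frames = deque(maxlen=int(seconds * fps))

    def push(self, frame):
        self.frames.append(frame)

    def snapshot(self):
        """Copy the buffered frames, oldest first, e.g. when an incident is detected."""
        return list(self.frames)
```

Memory use is bounded by `seconds * fps` frames, which is how storage constraints on a phone could translate into a shorter N.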

Mobile Optimizations (BA Kozonits)

Key optimizations enabling real-time processing on smartphones:

- Model Quantization: INT8 quantization reducing inference time by 3-4x

- Model Compression: Pruning redundant parameters and knowledge distillation

- Efficient Processing: Selective processing of frames and regions of interest

- Asynchronous Pipeline: Parallel processing stages for better performance

- Battery Optimization: Battery-aware operation modes and adaptive resolution

- Temporal Coherence: Exploiting frame-to-frame consistency
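To illustrate what INT8 quantization does to model weights, the sketch below applies symmetric per-tensor quantization to a list of floats. It is a toy example under assumptions: real deployments would rely on the quantization tooling of the target framework, and the function names are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    # Fall back to a scale of 1.0 when every weight is zero.
    scale = max(abs(w) for w in weights) / 127.0 or 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]
```

Each weight is stored in one byte instead of four, and the rounding error per weight is at most half the scale factor. That trade between precision and cost underlies the speedup cited above.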

System Integration

Both pipeline variants integrate with external systems and tools:

- OpenStreetMap: For road geometry, lane markings, and semantic map data

- Cloud Backend: For anonymized data collection and aggregated statistics

- Post-Processing Tools: For incident reconstruction and detailed analysis

- Visualization Platform: For displaying collision statistics, road widths, and dangerous locations (Rerun 3D Viewer)

- CARLA Simulator: For synthetic data generation and testing

Performance Characteristics

Desktop/Embedded

- High accuracy with comprehensive sensors

- Real-time processing >10 FPS

- Precise distance measurements from LIDAR

- Full PRECOG multi-agent prediction

- Requires dedicated computing hardware

Mobile

- Optimized for battery life and thermal management

- Acceptable frame rates on mid-range phones

- Approximate distances from camera

- Simplified prediction models

- Runs on standard smartphone hardware