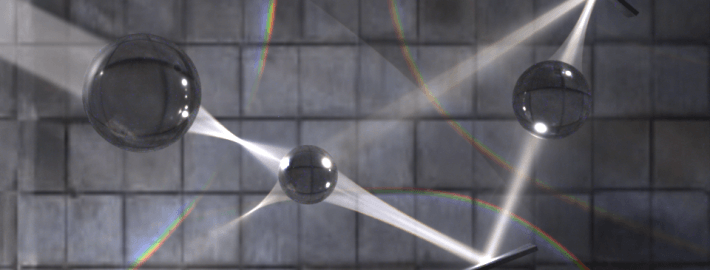

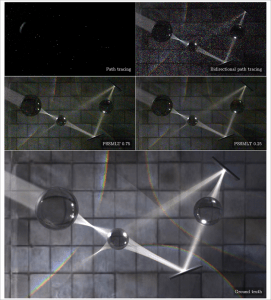

Sophisticated global illumination algorithms usually have several control parameters that need to be set appropriately in order to obtain high performance and accuracy. Unfortunately, the optimal values of these parameters are scene-dependent, so setting them is a cumbersome process that requires significant care and is usually based on trial and error. To address this problem, this paper presents a method to automatically control the large step probability parameter of Primary Sample Space Metropolis Light Transport (PSSMLT). The method does not require extra computation time or pre-processing, and runs in parallel with the initial phase of the rendering method. During this phase, it gathers statistics from the process and computes the parameters for the remaining part of the sample generation. We show that the theoretically proposed values are close to the manually found optimum for several complex scenes.

TLDR: We tried to quantify what it means to have an “easy” or “difficult” scene for light transport. It turns out that we can measure it reasonably reliably with a few simple metrics and create a “blend” between Bidirectional Path Tracing and Metropolis Light Transport to address it.
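To make the role of the large step probability concrete, here is a minimal Python sketch of a Kelemen-style PSSMLT chain over a toy integrand. It is an illustration under stated assumptions, not the paper's implementation: the function names (`small_step`, `mutate`, `pssmlt`), the toy integrand and the perturbation constants s1 = 1/1024 and s2 = 1/64 are choices made here for the example. With probability `p_large` the chain proposes a fresh, independent uniform sample (the "global" behavior you would get from the underlying sampler, for example BDPT fed with uniform random numbers); otherwise it perturbs the current sample locally (the Metropolis behavior). The acceptance counters only show the kind of per-step statistics a chain can record while it runs; they are not the eta_* statistics from the paper.

```python
import math
import random
from typing import Callable

Sample = list[float]  # a point in primary sample space, each coordinate in [0, 1)


def small_step(u: float, rng: random.Random,
               s1: float = 1 / 1024, s2: float = 1 / 64) -> float:
    """Kelemen-style local perturbation of one primary-sample coordinate.

    The step size lies in (s1, s2] with an exponential falloff (s1, s2 are
    assumed constants), and the result wraps around to stay in [0, 1).
    """
    dv = s2 * math.exp(-math.log(s2 / s1) * rng.random())
    u = u + dv if rng.random() < 0.5 else u - dv
    u %= 1.0
    return 0.0 if u >= 1.0 else u  # guard against float rounding up to 1.0


def mutate(x: Sample, p_large: float, rng: random.Random) -> tuple[Sample, bool]:
    """Propose a new sample: a large step with probability p_large, else a small step."""
    if rng.random() < p_large:
        return [rng.random() for _ in x], True       # independent uniform "large step"
    return [small_step(u, rng) for u in x], False    # local "small step"


def pssmlt(f: Callable[[Sample], float], dim: int, n_samples: int,
           p_large: float, seed: int = 0) -> tuple[list[Sample], dict[str, int]]:
    """Run one Metropolis chain in primary sample space targeting f (f >= 0)."""
    rng = random.Random(seed)
    x = [rng.random() for _ in range(dim)]
    fx = f(x)
    while fx <= 0.0:  # start the chain on a sample with nonzero contribution
        x = [rng.random() for _ in range(dim)]
        fx = f(x)

    stats = {"large_proposed": 0, "large_accepted": 0,
             "small_proposed": 0, "small_accepted": 0}
    samples: list[Sample] = []
    for _ in range(n_samples):
        y, is_large = mutate(x, p_large, rng)
        fy = f(y)
        kind = "large" if is_large else "small"
        stats[kind + "_proposed"] += 1
        # Both proposal types are symmetric, so the acceptance ratio is f(y) / f(x).
        if rng.random() < min(1.0, fy / fx):
            x, fx = y, fy
            stats[kind + "_accepted"] += 1
        samples.append(x)
    return samples, stats


if __name__ == "__main__":
    samples, stats = pssmlt(lambda x: 1.0 + x[0], dim=2, n_samples=20000, p_large=0.3, seed=1)
    print(stats)
    # For the density proportional to 1 + x[0], this mean tends toward 5/9 as n_samples grows.
    print(sum(s[0] for s in samples) / len(samples))
```

Setting `p_large = 1.0` turns every proposal into an independent sample, while values close to 0 keep the chain exploring locally. The paper's contribution is choosing this value automatically from statistics gathered during the initial phase of rendering, which this sketch does not attempt.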

Paper (full)

Paper (4-page)

Supplementary materials

A possible Blender implementation may be available here. If you know anything about it, tried it or improved it in any way, please let me know!

Update 2017.12.24 – By popular request, I have also uploaded the implementation that shows how I collected the eta_* statistics in LuxRender. Jump to line 473 in the metrosampler.cpp file to see the relevant collected variables (the notation follows the one presented in the paper). Please note that I found this implementation several years after finishing the project, so proceed with care, and please let us know if you spot a more recent implementation in the wild or if you have written one yourself. Mitsuba and pbrt implementations would be the priority (if possible). Thank you!

Update 2018.02.12 – Martin Mautner has contributed a Mitsuba implementation. Thank you so much! If you spot any mistakes, please let us know.

LuxRender code (for data collection, read note above)

Mitsuba code

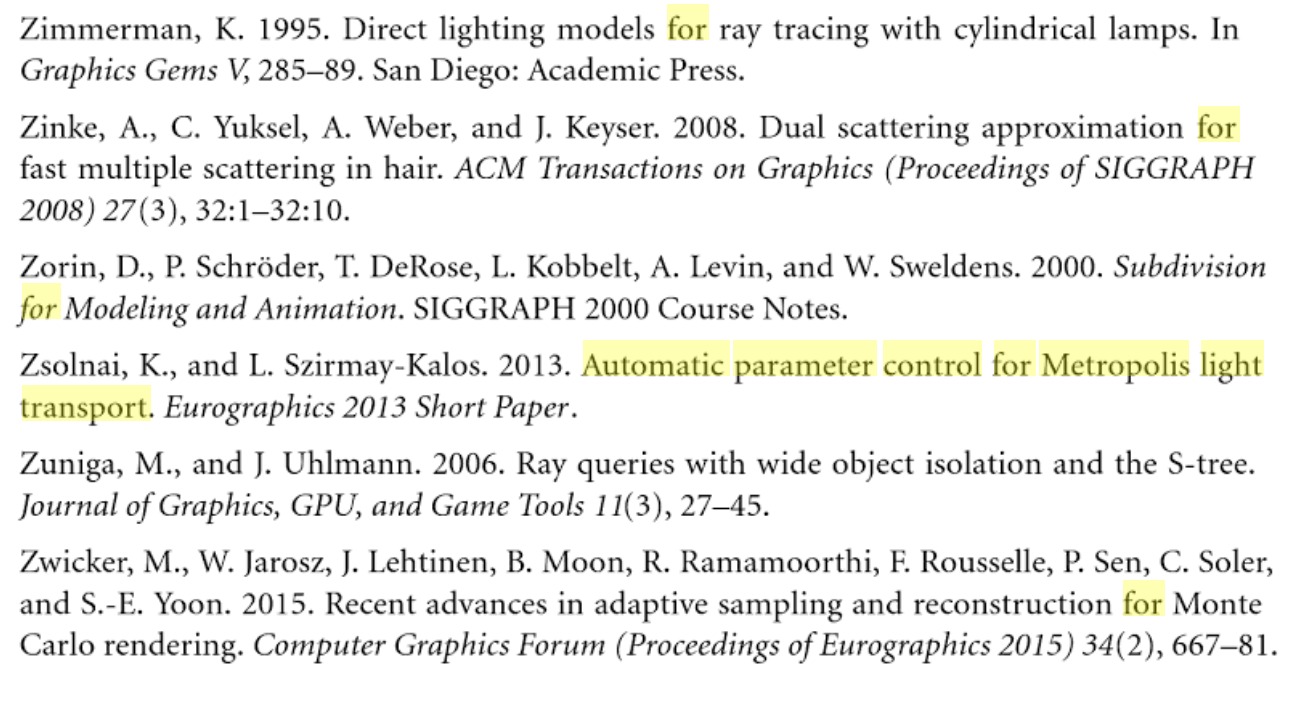

The paper is referenced in the pbrt book (page 1045, third paragraph). I can hardly imagine a higher honor for a rendering paper, and I had my John Boyega moment when I found out about it. 🙂 It is also referenced in the Handbook of Digital Image Synthesis: Scientific Foundations of Rendering. This is certainly not something I would have expected from a side project next to my Master's thesis; thanks for all the love, everyone!

Reference in pbrt

Reference in the Handbook

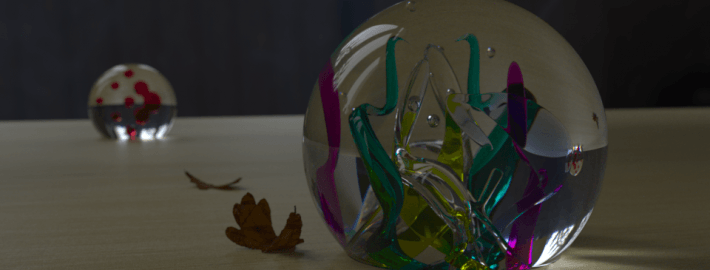

This work has been supported by TÁMOP-4.2.2.B-10/1-2010-0009 and OTKA K-104476. We thank Kai Schwebke for providing LuxTime, Vlad Miller for the Spheres, Giulio Jiang for the Chess, Aaron Hill for the Cornell Box, Andreas Burmberger for the Cherry Splash and Glass Ball scenes.

@inproceedings{Zsolnai13mlt,
  crossref = {short-proc},
  author = {Károly Zsolnai and László Szirmay-Kalos},
  title = {{Automatic Parameter Control for Metropolis Light Transport}},
  pages = {53--56},
  URL = {http://diglib.eg.org/EG/DL/conf/EG2013/short/053-056.pdf},
  DOI = {10.2312/conf/EG2013/short/053-056},
  abstract = {Sophisticated global illumination algorithms usually have several control parameters that need to be set appropriately in order to obtain high performance and accuracy. Unfortunately, the optimal values of these parameters are scene dependent, thus their setting is a cumbersome process that requires significant care and is usually based on trial and error. To address this problem, this paper presents a method to automatically control the large step probability parameter of Primary Sample Space Metropolis Light Transport (PSSMLT). The method does not require extra computation time or pre-processing, and runs in parallel with the initial phase of the rendering method. During this phase, it gathers statistics from the process and computes the parameters for the remaining part of the sample generation. We show that the theoretically proposed values are close to the manually found optimum for several complex scenes.}
}

@proceedings{short-proc,
  editor = {M.-A. Otaduy and O. Sorkine},
  title = {EG 2013 – Short Papers},
  year = {2013},
  isbn = {-},
  issn = {1017-4656},
  address = {Girona, Spain},
  publisher = {Eurographics Association}
}

If there are problems with the citation formatting, just take the one(s) from here.