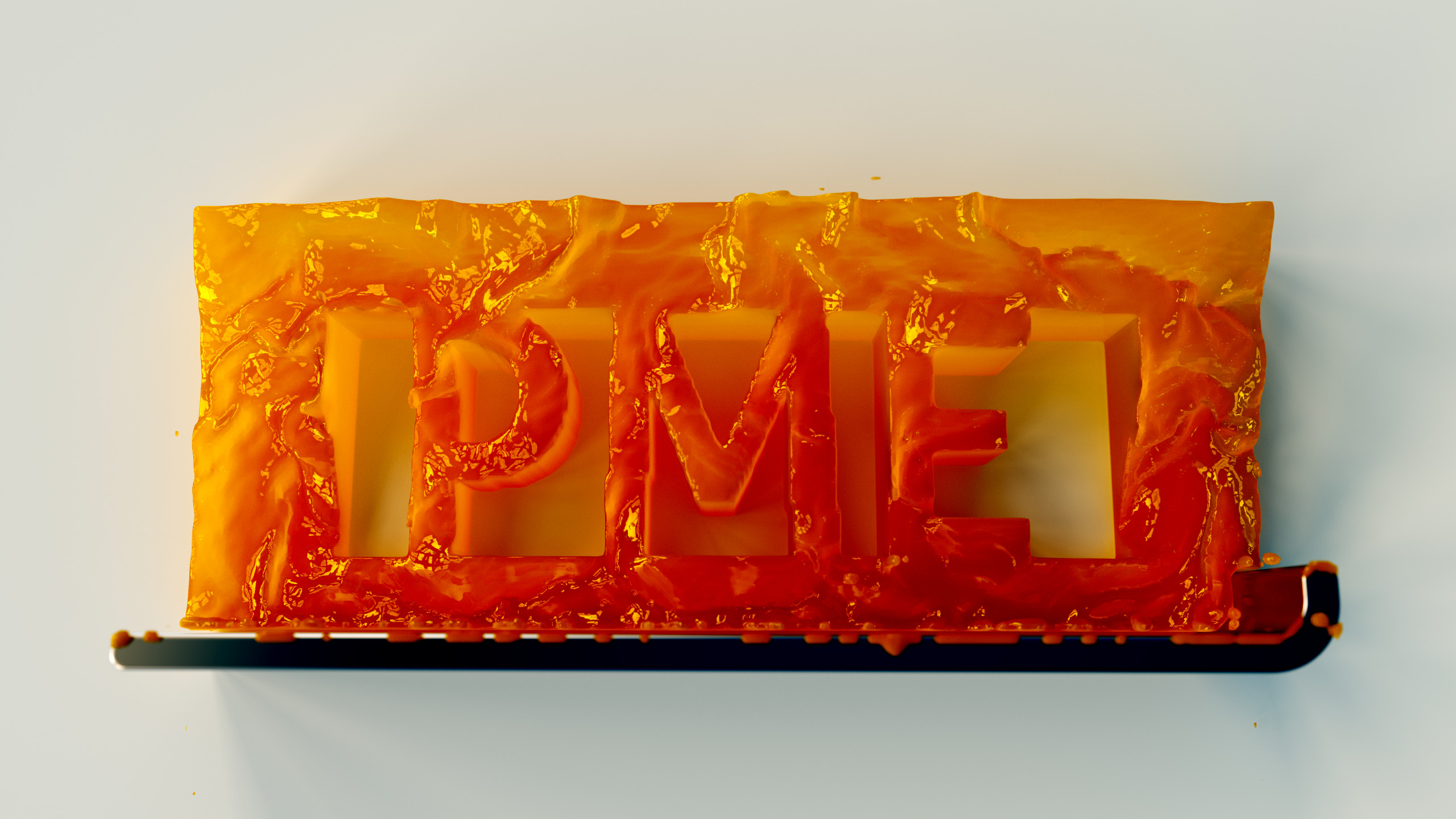

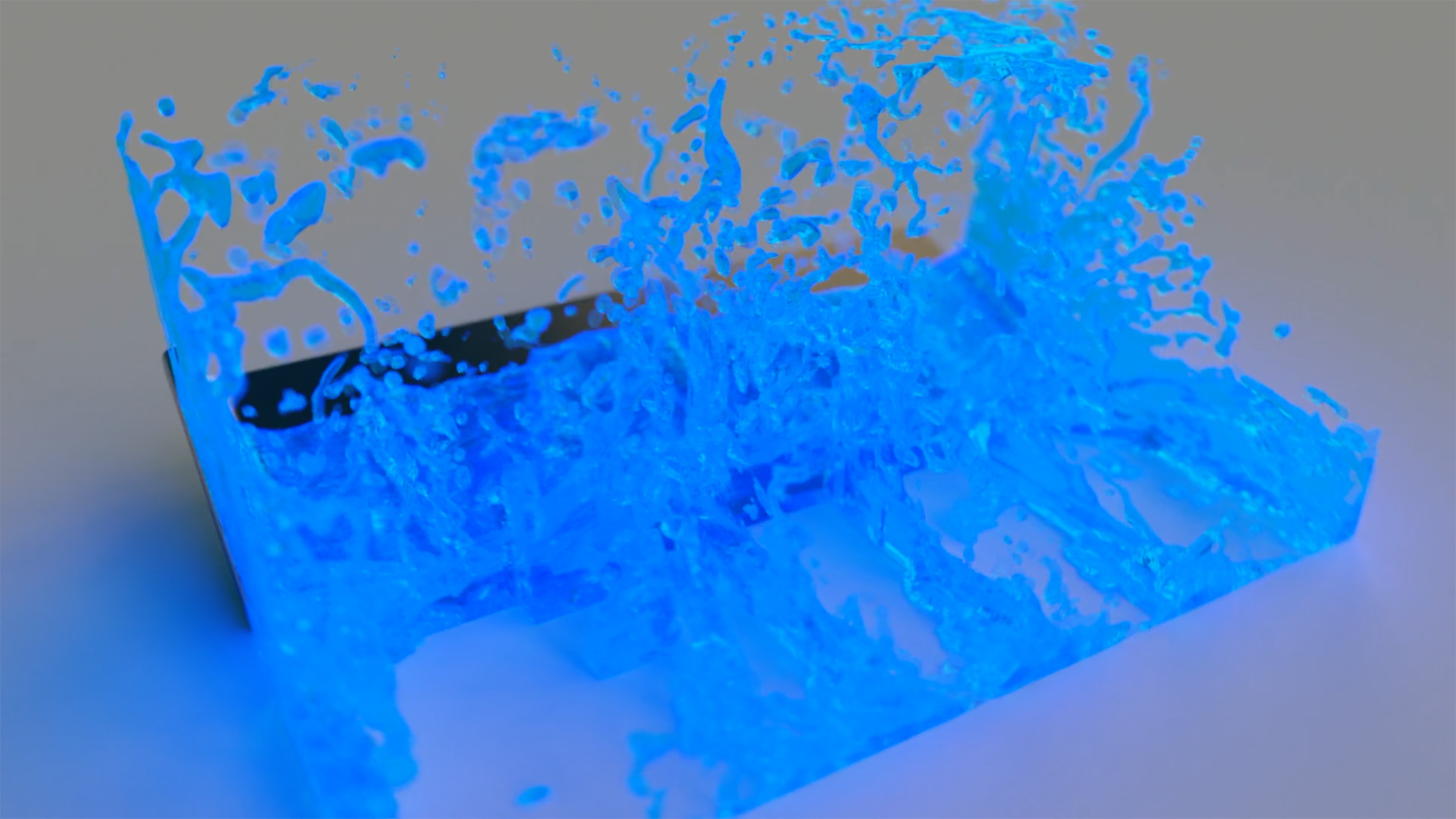

This work enables artists without photorealistic rendering experience to reuse their image editing knowledge to create a target image of the desired material. After this target image is shown to our learning-based method, the method finds the closest photorealistic material that matches it. This deceptively simple combination of a collection of neural network predictions and an optimizer outperforms significantly more complex techniques (including Gaussian Material Synthesis) when a handful of materials are sought.

Creating photorealistic materials for light transport algorithms requires carefully fine-tuning a set of material properties to achieve a desired artistic effect. This is typically a lengthy process that involves a trained artist with specialized knowledge. In this work, we present a technique that aims to empower novice and intermediate-level users to synthesize high-quality photorealistic materials while requiring only basic image processing knowledge. In the proposed workflow, the user starts with an input image and applies a few intuitive transforms (e.g., colorization, image inpainting) within a 2D image editor of their choice; our technique then produces a photorealistic result that approximates this edited target image. Our method combines the advantages of a neural network-augmented optimizer and an encoder neural network to produce high-quality results within 30 seconds. We also demonstrate that it is resilient against poorly edited target images and propose a simple extension to predict image sequences with a strict time budget of 1–2 seconds per image.
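The general idea of pairing fast neural predictions with an optimizer can be illustrated with a toy sketch. Everything in it is an assumption for illustration, not the paper's method or source code: the "renderer" is a fixed linear map standing in for a trained neural network, the candidate guesses stand in for encoder predictions, and plain projected gradient descent stands in for whatever optimizer the actual technique uses. The names `render`, `refine` and `match_material` are hypothetical.

```python
# Toy sketch of "encoder guess + neural-renderer-guided optimizer".
# ASSUMPTION: everything below is illustrative and hypothetical; it is not
# the paper's implementation or its source code.

# Hypothetical stand-in for a trained neural renderer: a fixed linear map
# from 3 normalized material parameters to a 4-pixel "image".
WEIGHTS = [
    [0.9, 0.1, 0.0],
    [0.2, 0.7, 0.1],
    [0.0, 0.3, 0.6],
    [0.5, 0.2, 0.3],
]


def render(params):
    """Predict a tiny image (list of pixel values) from material parameters."""
    return [sum(w * p for w, p in zip(row, params)) for row in WEIGHTS]


def loss(params, target):
    """Squared L2 distance between the predicted image and the target image."""
    return sum((a - b) ** 2 for a, b in zip(render(params), target))


def grad(params, target):
    """Analytic gradient of `loss` for the linear toy renderer: 2 * W^T (W p - t)."""
    residual = [a - b for a, b in zip(render(params), target)]
    return [
        2.0 * sum(residual[i] * WEIGHTS[i][j] for i in range(len(WEIGHTS)))
        for j in range(len(params))
    ]


def refine(params, target, lr=0.2, steps=500):
    """Projected gradient descent that keeps every parameter inside [0, 1]."""
    p = list(params)
    for _ in range(steps):
        g = grad(p, target)
        p = [min(1.0, max(0.0, pj - lr * gj)) for pj, gj in zip(p, g)]
    return p


def match_material(target, candidates):
    """Pick the candidate whose predicted image is closest to the target,
    then refine it with the optimizer.

    `candidates` plays the role of (hypothetical) encoder predictions.
    """
    best = min(candidates, key=lambda c: loss(c, target))
    return refine(best, target)


if __name__ == "__main__":
    true_params = [0.8, 0.3, 0.5]
    target = render(true_params)  # stands in for the user-edited target image
    candidates = [[0.75, 0.42, 0.39], [0.2, 0.9, 0.1], [0.5, 0.5, 0.5]]
    result = match_material(target, candidates)
    print([round(x, 3) for x in result])
```

Because this toy renderer is linear and the target is exactly reachable, the refinement recovers the parameters that produced the target. With a real neural renderer the loss landscape is non-convex, so a good starting guess from the neural predictions is likely to matter much more than it does here.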

Keywords: neural networks, photorealistic rendering, neural rendering, photorealistic material editing

This paper is under the permissive CC-BY license, except for the parts that build upon third-party materials – please check the acknowledgements section in the paper for those. The source code is under the even more permissive MIT license. Feel free to reuse the materials and hack away at the code! If you build something on top of this, please drop me a message – I’d love to see where others take these ideas and will leave links to the best ones here.

I tried to make the code as easy to run as possible; however, if you have found a way to make this easier (e.g., a well-tested requirements.txt file, a Docker container or anything else), please let me know and I will be happy to link to it or include it here. Thank you!

Changelog:

2019/09/12 – Published the tech report.

2019/12/17 – The “Paradigm” scene from the teaser image of this paper won the 2020 Computer Graphics Forum cover contest. What an honor, thank you so much! The geometry of the scene (and some materials, other than the ones highlighted in the teaser image) was made by Reynante Martinez.

2020/06/03 – The paper has been accepted to EGSR 2020. I am working on the video for the full talk, which will hopefully appear here around June 17th.

2020/06/17 – The talk video is now available above.

We would like to thank Reynante Martinez for providing us with the geometry and some of the materials for the Paradigm (Fig. 1) and Genesis scenes (Fig. 3), ianofshields for the Liquify scene that served as a basis for Fig. 9, Robin Marin for the material test scene, Andrew Price and Gábor Mészáros for their help with geometry modeling, Felícia Zsolnai-Fehér for her help improving our figures, and Christian Freude, David Ha, Philipp Erler and Adam Celarek for their useful comments. We also thank the anonymous reviewers for their help improving this manuscript and NVIDIA for providing the hardware to train our neural networks. This work was partially funded by the Austrian Science Fund (FWF), project number P27974.

@article{zsolnaifeher2020pme,
  title={Photorealistic Material Editing Through Direct Image Manipulation},
  author={K\'{a}roly Zsolnai-Feh\'{e}r and Peter Wonka and Michael Wimmer},
  journal={Comput. Graph. Forum},
  year={2020},
}

If there are problems with the citation formatting, just take the one(s) from here.